Rainbow Unit: Networks Big and Small

2A: Digital Internets, Past and Present

Background Knowledge Probe

- What is the first thing that comes to mind when someone says they are on the Internet right now? Is this the same as or different from internetworking as we discussed in the last session?

- What quickly comes to mind as the top three transformative advancements that have resulted from the “digital revolution”?

- What might be the concealed stories behind the stock stories regarding these Internet and digital revolution memories?

Some Early Examples of Systems for Networking and Internetworking

Networking

- The exchange of information or services among individuals, groups, or institutions; specifically: the cultivation of productive relationships for employment or business

- The establishment or use of a computer network

First known use of networking: 1967, in the meaning defined in sense 1

Merriam-Webster, “Networking”[1]

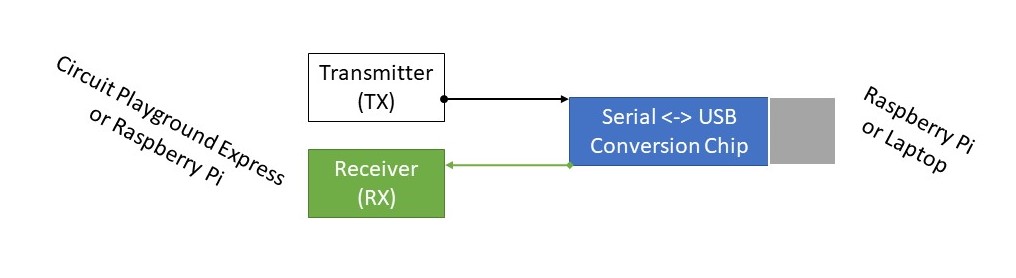

As we have explored throughout the textbook, there are many ways to connect, or network, two devices together. We’ve made use of the Universal Asynchronous Receiver-Transmitter, or UART, protocol with our own devices. We have also briefly reviewed the Inter-Integrated Circuit (I2C) bus, a synchronous, multi-parent, multi-worker, packet-switched, single-ended serial computer bus.
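Because UART is asynchronous, the sender and receiver share no clock signal; instead, each byte travels inside its own frame so the receiver can tell where it starts and stops. The minimal Python sketch below assumes the common 8N1 setting (8 data bits, no parity bit, 1 stop bit) and ignores baud-rate timing entirely; the function names are illustrative, not taken from any library.

```python
def uart_frame(byte: int) -> list[int]:
    """Return the line levels for one 8N1 UART frame: a start bit (0),
    eight data bits sent least-significant bit first, and one stop bit (1).
    An idle UART line rests at 1, so the 0 start bit marks a new frame."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("an 8N1 frame carries exactly one byte (0-255)")
    data_bits = [(byte >> i) & 1 for i in range(8)]  # LSB first
    return [0] + data_bits + [1]


def uart_deframe(bits: list[int]) -> int:
    """Recover the byte from a 10-bit 8N1 frame, checking start and stop bits."""
    if len(bits) != 10 or bits[0] != 0 or bits[9] != 1:
        raise ValueError("framing error: missing start or stop bit")
    return sum(bit << i for i, bit in enumerate(bits[1:9]))


frame = uart_frame(ord("A"))      # 'A' is 0x41, binary 01000001
print(frame)                      # [0, 1, 0, 0, 0, 0, 0, 1, 0, 1]
print(chr(uart_deframe(frame)))   # A
```

Real UART hardware also samples each bit at an agreed baud rate; a receiver that finds a 0 where the stop bit should be reports a framing error, which the sketch mimics with `ValueError`.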

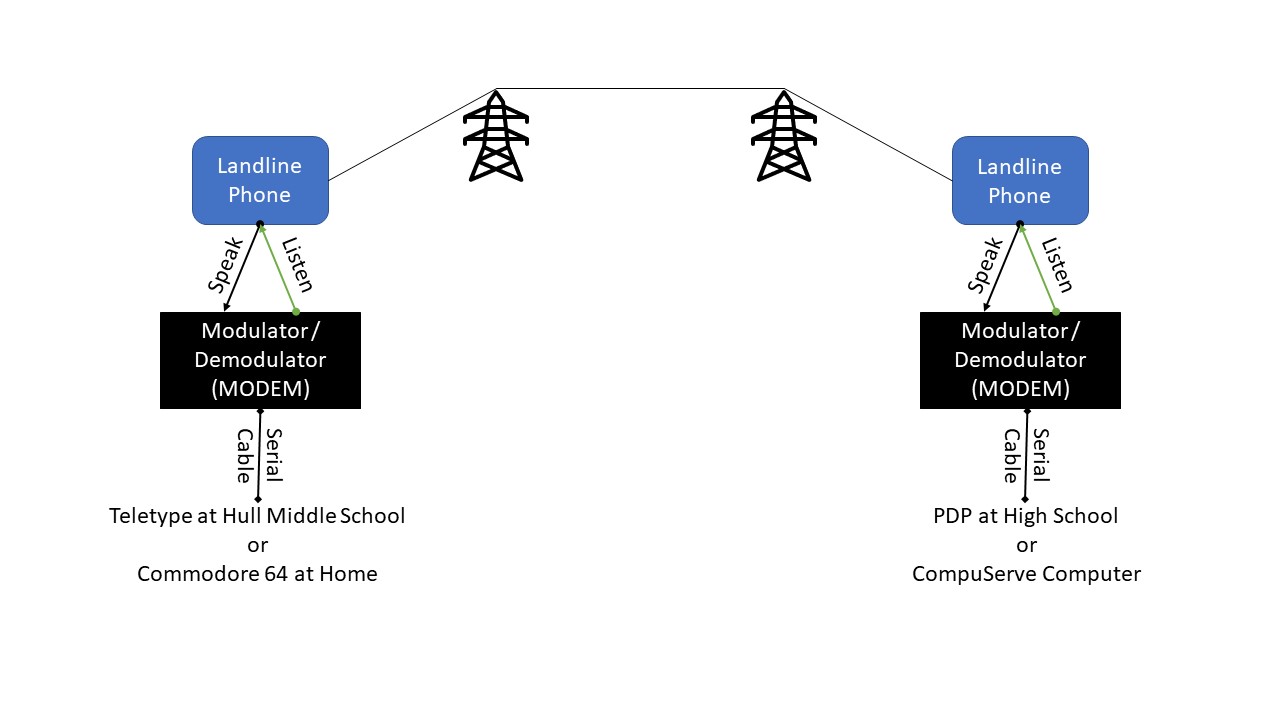

There are many other ways to network devices together, through physical cables (Ethernet, fiber optics) or wireless signals at varying frequencies (Wi-Fi, Bluetooth, GPS, infrared, cellular, satellite, low-power radio). My own history with networking goes back to the 1970s and the growth of radio-controlled model airplanes. While attending Hull Middle School, I used a teletype and a landline phone to dial in to a minicomputer at the high school, both to play Star Trek video games in my free time and to write my first BASIC program, which calculated the board feet of a log for my family’s sawmill. (My dad was not impressed. He preferred to keep using our analog, hand-held Doyle log rules.)

Then in the early 1980s I bought a Commodore 64! The night before I picked it up, I remember using a manual typewriter to complete an English class assignment at Lake Michigan Community College and continually thinking, “This is the last time I will ever need to use whiteout!!” When I got it, I also picked up a few issues of PC Magazine so that I could read the BASIC program listings, type them in, and use them to play games and to begin creating my own calculation tools for making. For a short period I also tested out CompuServe, a commercial online service, where I could join discussions and download other BASIC programs. But connecting by landline phone from my rural home was expensive. So for all of these, there was only one way I effectively internetworked: sneakernet. Sneakernet is an informal, sometimes tongue-in-cheek term for those times when the best, or perhaps only, way to transfer electronic data between devices is by physically moving storage media (e.g., magnetic tape, floppy disk, hard drive, USB flash drive) from device to device.

But the early 1980s also saw the emergence of BITNET, an international cooperative network launched by Ira H. Fuchs at the City University of New York and Greydon Freeman at Yale University.[2] It used IBM’s Remote Spooling Communications Subsystem (RSCS) protocol in a store-and-forward network: data and information from a given BITNET-connected computer were sent to a central mainframe computer, stored, and then forwarded to other mainframe computers. This university-focused network eventually spanned the coasts of the United States and was joined in 1982 by the European Academic and Research Network, or EARN, providing a means of communication across a broad research community. For me, as I worked towards my PhD at Rutgers, The State University of New Jersey, this was my first true internetworking, providing an easy way to email, store, and forward research data across campus and between universities. But as with previous networked systems I had used, the primary focus was on centralized administration and specific target audiences. To get the support I needed from people outside the university BITNET community to fix microcontroller or C code problems with the small startup businesses supporting our work, I had to communicate by landline phone and send digital data and electronics by postal mail.
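The store-and-forward idea can be sketched as a toy simulation in Python. This is not how RSCS itself was implemented: the node names (A, HUB, B), the `next_hop` routing table, and the one-hop-per-round timing are hypothetical simplifications. What it illustrates is that a message waits in a node's spool while the next link is unavailable, rather than being lost.

```python
from collections import deque


class Node:
    """A hypothetical store-and-forward host with a local spool."""

    def __init__(self, name):
        self.name = name
        self.spool = deque()   # messages stored here, waiting to move on
        self.delivered = []    # messages that reached their destination here


def step(nodes, next_hop, links_up):
    """One forwarding round. Each node tries to pass the oldest message in its
    spool one hop closer to its destination; if that link is down, the message
    simply stays stored until a later round."""
    moves = []
    for node in nodes.values():
        if not node.spool:
            continue
        msg = node.spool[0]
        hop = next_hop[(node.name, msg["to"])]
        if frozenset((node.name, hop)) in links_up:
            moves.append((hop, node.spool.popleft()))
    # Apply moves after scanning so a message advances at most one hop per round.
    for hop, msg in moves:
        if hop == msg["to"]:
            nodes[hop].delivered.append(msg)
        else:
            nodes[hop].spool.append(msg)


nodes = {name: Node(name) for name in ("A", "HUB", "B")}
# Hypothetical topology: A reaches B only through HUB.
next_hop = {("A", "B"): "HUB", ("HUB", "B"): "B"}
nodes["A"].spool.append({"to": "B", "body": "research data"})

links_up = {frozenset(("A", "HUB"))}     # the HUB-B line starts out down
step(nodes, next_hop, links_up)          # A -> HUB: stored in HUB's spool
step(nodes, next_hop, links_up)          # HUB-B still down: message waits
links_up.add(frozenset(("HUB", "B")))    # the line comes back up
step(nodes, next_hop, links_up)          # HUB -> B: delivered
print(nodes["B"].delivered)              # [{'to': 'B', 'body': 'research data'}]
```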

Emergence of an Open Model for Internetworking

At the same time that commercial service providers such as CompuServe and cooperative university networking systems such as BITNET were emerging with a focus on centralized servers and services, other models for internetworking were also under development. A key design principle of these open internetworking structures was that the computing devices connected to the network, rather than the network itself, were responsible for the reliable processing and delivery of data. This pioneering work came from Louis Pouzin and the French Cyclades Network in the early 1970s.[3] Cyclades was created so that applications could work transparently even when the devices were on different types of networks. Pouzin chose the name Cyclades, after the Greek islands of that name that form a circle in the Aegean Sea, as an inspirational image of connections between networks, or internets. In his presentation of the Cyclades network to French ministers in 1974, Maurice Allegre offered this metaphor:

One should retain the image [of the Cyclades islands]; the processing centers are still today islands isolated in the middle of an ocean of data, which overwhelms our civilization. Now, thanks to the networks, these islands will be able to connect and thus contribute to a wide circle of information exchanges which will shape the future development of our society.[4]
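The principle that hosts, not the network, take responsibility for reliable delivery can be illustrated with stop-and-wait retransmission, a deliberately simple scheme chosen here for clarity. This Python sketch is not the transport protocol Cyclades actually used; the lossy network, its loss rate, the random seed, and the retry limit are hypothetical parameters.

```python
import random


def lossy_send(packet, loss_rate, rng):
    """A hypothetical datagram network: it makes no delivery promises and
    silently drops each packet with probability loss_rate."""
    return None if rng.random() < loss_rate else packet


def transfer(chunks, loss_rate=0.3, seed=1, max_tries=50):
    """Stop-and-wait retransmission handled entirely by the two end hosts."""
    rng = random.Random(seed)
    received = {}   # receiving host's state: sequence number -> chunk
    attempts = 0
    for seq, chunk in enumerate(chunks):
        for _ in range(max_tries):
            attempts += 1
            packet = lossy_send((seq, chunk), loss_rate, rng)
            if packet is None:
                continue                               # lost: sender times out, resends
            received.setdefault(packet[0], packet[1])  # a resent duplicate is ignored
            ack = lossy_send(seq, loss_rate, rng)      # receiver acknowledges seq
            if ack == seq:
                break                                  # acknowledged: send next chunk
        else:
            raise RuntimeError(f"gave up on chunk {seq}")
    return [received[i] for i in range(len(chunks))], attempts


data, attempts = transfer(["Cyc", "la", "des"])
print("".join(data))   # Cyclades
```

Because the network in this sketch makes no promises, every recovery step (numbering, acknowledging, retransmitting, discarding duplicates) happens at the edges. Keeping the network itself simple in this way is one reason such designs made it easier to join dissimilar networks.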

Ultimately, the French government chose the Transpac telecom monopoly’s plan, in which the network provider centrally controlled data traffic and quality of service from within the network. France kept this model until 2012 before fully transitioning to the commercial Internet, a network for which the Cyclades open model had provided essential inspiration.

However, key features of Cyclades influenced internetworking work at the Advanced Research Projects Agency (ARPA) of the United States Department of Defense, which in 1966 had begun developing another packet-switching distributed communications network, officially named ARPANET. ARPA itself was formed as an agency in 1958, after the Union of Soviet Socialist Republics (USSR) launched the satellite Sputnik in 1957, with the objective of establishing a United States (US) lead in science and technology applicable to the military. The agency was in large part a continuation of the post-World War II high-technology industries of the Cold War period. Like many agencies of the post-war development period, it funded extensive basic research by academia and industry to develop generic infrastructure, protocols, and standards.

One central development within this distinctively US open internetworking sociotechnical system was the launch in 1969 of the Request for Comments (RFC) series, a system for keeping unofficial notes on the development of ARPANET that grew to include peer-reviewed official documents of Internet specifications, communications protocols, and procedures.[5] Today it is overseen by the Internet Engineering Task Force (IETF), founded in 1986 by the Internet Architecture Board (IAB) as part of the United States Department of Defense’s Defense Advanced Research Projects Agency (DARPA). In 1992, it became part of the Internet Society (ISOC), an American nonprofit organization founded as part of a larger transition of the Internet away from being a United States governmental entity.[6]

Examples of the Request for Comments (RFC) Process

The Hypertext Transfer Protocol (HTTP) is one example of how open standards and protocols develop through the RFC process. Its initial development from 1989 to 1992 by Tim Berners-Lee and colleagues happened as part of their work at CERN in Switzerland. It was first released publicly on the USENET newsgroups alt.hypertext, comp.sys.next, comp.text.sgml, and comp.mail.multi-media. It wasn’t until 1996 that RFC 1945, an informational RFC describing HTTP/1.0, was released. This was followed by RFC 2068, a proposed standard for HTTP/1.1, in 1997 and RFC 2616, a draft standard for HTTP/1.1, in 1999.
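One concrete difference between the two versions shows up in the request message itself. RFC 2616 requires every HTTP/1.1 request to carry a Host header, and HTTP/1.1 connections stay open by default unless a client sends `Connection: close`; HTTP/1.0 as described in RFC 1945 had neither rule. The Python sketch below only builds and inspects request bytes and opens no network connection; `example.com` and `/index.html` are placeholder values, and the function names are illustrative.

```python
def build_request(path: str, host: str, version: str = "HTTP/1.1") -> bytes:
    """Build a minimal GET request. Header lines end in CRLF, and an empty
    line (a second CRLF) marks the end of the header section."""
    lines = [f"GET {path} {version}"]
    if version == "HTTP/1.1":
        lines.append(f"Host: {host}")       # mandatory in HTTP/1.1 requests
        lines.append("Connection: close")   # 1.1 connections persist by default
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def parse_request_line(raw: bytes) -> tuple[str, str, str]:
    """Split the first line of a request into method, target, and version."""
    first_line = raw.split(b"\r\n", 1)[0].decode("ascii")
    method, target, version = first_line.split(" ")
    return method, target, version


print(build_request("/index.html", "example.com").decode("ascii"))
print(parse_request_line(build_request("/", "example.com", "HTTP/1.0")))
# ('GET', '/', 'HTTP/1.0')
```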

For comparison, consider the earlier development of the Simple Mail Transfer Protocol (SMTP). This protocol evolved through early drafts submitted in 1980 (RFC 772) and 1981 (RFC 780 and RFC 788). It became an Internet standard with RFC 821 in 1982.
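The SMTP model in RFC 821 is a lock-step dialogue: the sender-SMTP issues a command, and the receiver-SMTP answers with a numeric reply before the next command is sent. The Python sketch below lists only the sender's commands for one message, with the expected success reply codes as comments. It omits error handling and network I/O, and the host names and mailboxes are hypothetical placeholders under the reserved `.example` domain. It also applies RFC 821's "transparency" rule: a body line that begins with a period gets an extra period so it cannot be mistaken for the end-of-data marker.

```python
def smtp_client_script(sender, recipients, body, client_host):
    """Return the sender-SMTP command lines (CRLF omitted) for one message."""
    script = [f"HELO {client_host}",          # receiver replies 250
              f"MAIL FROM:<{sender}>"]         # 250
    script += [f"RCPT TO:<{r}>" for r in recipients]  # 250 per recipient
    script.append("DATA")                      # 354: start mail input
    for line in body.split("\n"):
        # Transparency: double a leading "." so the line is not read as the end marker.
        script.append("." + line if line.startswith(".") else line)
    script.append(".")                         # end of data; receiver replies 250
    script.append("QUIT")                      # 221: closing transmission channel
    return script


for line in smtp_client_script("ann@host-a.example", ["bob@host-b.example"],
                               "Data attached.\n.signature follows",
                               "host-a.example"):
    print(line)
```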

In both examples, the original standard was iteratively extended as needed to fit contemporary Internet contexts.

Take a few minutes to look at the abstracts of these RFC drafts and standards. Read through the introduction of RFC 2616, HTTP/1.1 (published in 1999) and compare it to the introduction and “The SMTP Model” from RFC 821 (published in 1982). How do these two RFCs differ? How are they the same?

In 1993 I joined the Neuronal Pattern Analysis group at the Beckman Institute, University of Illinois at Urbana-Champaign, as a postdoctoral researcher. One project I was part of explored ways to share raw data between research labs around the globe using the Internet. At the same time, the University’s National Center for Supercomputing Applications was developing NCSA Mosaic, the first widely used graphical web browser. Being part of this research team, which sought to collect, store, and share large raw datasets using HTML through our in-house NCSA Mosaic HTTP server, meant spending considerable time poring over USENET discussions and reviewing the raw HTML of web pages for examples, since the protocol was still in early development. Sometimes it also meant hearing about upcoming changes early through hallway conversations with those working on Mosaic.

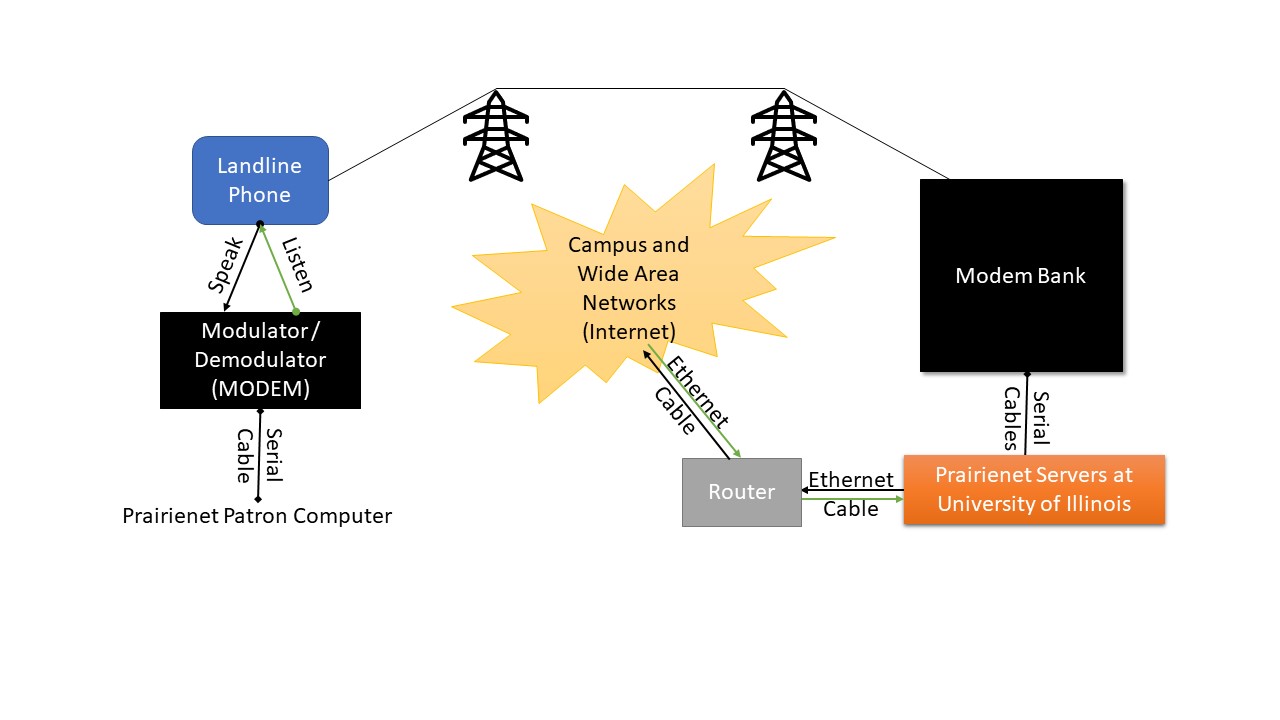

And for me, this journey through systems for networking and internetworking took another radical turn when in 1995 I decided to test the waters outside of the tenure system and neuroscience to join the team developing Prairienet Community Network at the Graduate School of Library and Information Science, University of Illinois Urbana-Champaign.

The Free-net and Community Networking Movements

In 1997, an informational Request for Comments, RFC 2235, was submitted by Robert H. Zakon and the Internet Society. As noted in the introduction, “This document presents a history of the Internet in timeline fashion, highlighting some of the key events and technologies which helped shape the Internet as we know it today.”[7] This includes a listing of many different national and international internetworking organizations and companies, including the Cleveland Free-Net, launched in 1986 by Tom Grundner of Case Western Reserve University.

Cleveland Free-Net used a community-based bulletin-board system (BBS) model, in which a community server hosts forums that help local citizens share public information online, and it launched a wider Free-net movement. Free-nets used local voice-grade landline dial-up connections to provide free or low-cost access from home, school, and library computers to a multiuser computer at a central location in the community. Each caller’s computer was connected to a modulator-demodulator, or modem, which converted the computer’s digital ones and zeros into audio tones that could travel over the voice-grade phone line; a set of modems attached to the multiuser computer converted those tones back into digital data. The multiuser computer itself ran a range of server applications in addition to BBS software and also provided access to the Internet, including USENET with its store-and-forward framework. Together these inspired many of the forums that became available with the emergence of the World Wide Web, built on HTML and HTTP.
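A modem’s core job can be sketched in a few lines: modulation turns each digital 1 or 0 into an audio tone that a voice-grade phone line can carry, and demodulation decides which tone arrived. This Python sketch assumes Bell 103-style frequency-shift keying (1270 Hz for 1 and 1070 Hz for 0 on the originating side, at roughly 300 bits per second) and an assumed 8000-samples-per-second signal. The detection method, a per-bit comparison of tone energy, is a textbook simplification; real modems also handled filtering, carrier detection, and the answering side’s separate pair of tones.

```python
import math

MARK_HZ, SPACE_HZ = 1270.0, 1070.0   # Bell 103 originate tones for 1 and 0
BAUD = 300                            # nominal bits per second
RATE = 8000                           # samples per second (assumed)
SPB = RATE // BAUD                    # 26 samples per bit, so only about 300 bit/s


def modulate(bits):
    """Turn 1s and 0s into audio samples, one tone per bit value. The phase
    carries over between bits (continuous-phase FSK) to avoid clicks."""
    samples, phase = [], 0.0
    for bit in bits:
        freq = MARK_HZ if bit else SPACE_HZ
        for _ in range(SPB):
            samples.append(math.sin(phase))
            phase += 2 * math.pi * freq / RATE
    return samples


def tone_energy(chunk, freq):
    """Correlate a chunk with sine and cosine at freq; the sum of squares
    measures how much of that tone is present, whatever its phase."""
    s = sum(x * math.sin(2 * math.pi * freq * n / RATE) for n, x in enumerate(chunk))
    c = sum(x * math.cos(2 * math.pi * freq * n / RATE) for n, x in enumerate(chunk))
    return s * s + c * c


def demodulate(samples):
    """Recover bits by asking, for each bit period, which tone is stronger."""
    bits = []
    for start in range(0, len(samples) - SPB + 1, SPB):
        chunk = samples[start:start + SPB]
        mark, space = tone_energy(chunk, MARK_HZ), tone_energy(chunk, SPACE_HZ)
        bits.append(1 if mark > space else 0)
    return bits


bits = [int(b) for b in format(ord("P"), "08b")]   # 'P' is 01010000
print(demodulate(modulate(bits)) == bits)           # True
```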

In a speech delivered at the University of Illinois at Urbana-Champaign in 1994, Grundner put forward the term “cybercasting” to compare Free-nets to “broadcasting.” He went on to offer the following vision:

Basically, it’s the same service you find in radio or television networks. You might have, let’s say, a local radio station here in Urbana and you might have your own radio talk show hosts or disc jockeys. But you might also be taking feeds from ABC Radio. Similarly, we have independent affiliates who operate their community computer systems, drawing upon local people, local information resources, and so forth. Then we try to supplement that with high-quality feeds from the network level—information services and features that supplement what they’re able to do locally.[8]

For Grundner and the Free-net movement, community computing was about building stronger citizens through community conversations and information sharing; advancing local K-12 education and two-way interactions with government; supporting small- and medium-sized businesses that could not otherwise afford the services only major corporations could obtain; including the agricultural community, which is often left out of conversations with county officials and extension agents; and serving hundreds of local community organizations. Further, community media, which historically has made strong use of Public, Educational, and Government access (PEG) cable television channels to express concerns about local issues, engage in democratic debate, and deliver reliable access to information, art, and music, also found great value in this community computing movement. This vision embodied the inspiration and dreams of Prairienet Free-net, later called Prairienet Community Network, which held its official grand opening in June 1994 and which I joined in the fall of 1995. Other early networks embodying similar principles were also being developed, including the Community Memory project from the 1970s, FidoNet and other public bulletin board systems, and other early community networking systems such as PEN (Public Electronic Network) and the WELL (Whole Earth ‘Lectronic Link) in California.

Community Networks

Every community has a system of ‘core values’ that help maintain its ‘web of unity’… A community network supports these core values by supporting the information and communications needs of smaller communities within the larger community and by facilitating the exchange of information between individuals within these small communities … and by encouraging the exchange of information among communities.

Douglas Schuler, “Community networks and the evolution of civic intelligence”[9]

Prairienet

Prairienet is used to make community information available in electronic form and is also used as a free access point to Internet information resources… The fundamental question to be addressed by the research is, “to what extent is Prairienet serving its intended purpose as a community forum for education and information access?”

Gregory B. Newby and Ann P. Bishop, “Community System Users and Uses”[10]

Free-nets and other community networks in part served an essential role at a time when Internet access remained largely the province of research projects using the NSFNET-based Internet. In 1995, NSFNET reverted to a research network backbone linking supercomputing centers, and United States wide area network (WAN) traffic was instead routed through interconnected commercial network service providers and backbone providers, opening the Internet to full commercial use. From the late 1990s into the mid-2000s, commercial Internet Service Providers and larger web service providers replaced core aspects of Free-nets and of centrally located community computing systems that provided dial-up access. Free-nets that remained, such as Austin Freenet, shifted their focus to technology training and access for city residents. Others, such as Prairienet, ended after more than a decade of service, while the host School of Information Sciences continues its work to foster community networking through graduate and undergraduate education, community inquiry and participatory action research, service-learning and design studios, and mentoring and internships. In so doing, a range of community-based stakeholders strive to bridge silos by coming together to innovate-in-use Internet-based tools for social and democratic participation. At their core, community networks remain a gathering of schools and libraries, of government and small and medium businesses, and of a range of community organizations addressing local issues for all sections of the community, thereby advancing community development for a more just society.

The Commercialization of the Internet

During the early 1980s, some commercial vendors began to incorporate Internet protocols into the products they offered for competitive, private network services, as noted by some of the early Internet developers in their article “A Brief History of the Internet.” Then in 1983, the United States Department of Defense split off from ARPANET to create its own separate network, MILNET. In 1986, the United States National Science Foundation Network (NSFNET) was created, linking five supercomputing centers to provide open access to high-performance computing for ARPANET users around the world. Locations included Princeton (John von Neumann Center, JVNC), Pittsburgh (Pittsburgh Supercomputing Center, PSC), the University of California, San Diego (San Diego Supercomputer Center, SDSC), the University of Illinois Urbana-Champaign (National Center for Supercomputing Applications, NCSA), and Cornell (Theory Center). In 1988, an NSF-initiated conference at Harvard’s Kennedy School of Government entitled “The Commercialization and Privatization of the Internet” built on a growing number of earlier three-day workshops to begin a broader conversation on the topic. This soon led to the first Interop trade show, held in September of that same year. Vendors also started attending periodic IETF meetings to discuss new ideas regarding Internet protocols. The late 1980s and 1990s also saw a strengthening of US intellectual property rights policies. This “pro-patent” posture opened up possibilities for university faculty-founded firms and new public-private partnerships.[11] Combined, these developments supported a rich, mutually cooperative evolution of the Internet among research, end-user, and vendor stakeholders, transforming it from a primarily military-funded research program into an internetworking suite available both publicly and privately.

And so while other global models of internetworking faded, such as the French Cyclades Network, which lost out to a telecom monopoly, the US Internet model rapidly expanded through this public-private partnership, which many people first encountered through local and regional community networks.

In 1995, the United States National Science and Technology Council’s (NSTC) Committee on Computing, Information and Communications chartered the Federal Networking Council (FNC). The NSTC is a Cabinet-level council established by Executive Order 12881 in 1993, early in President Bill Clinton’s first term, and is part of the Executive Branch’s Office of Science and Technology Policy. The membership of the FNC consisted of one representative from each federal agency whose programs used interconnected TCP/IP Internet networks. Its charter was two-fold:

- to provide a forum for networking collaborations among Federal agencies to meet their research, education, and operational mission goals.

- to bridge the gap between the advanced networking technologies being developed by research FNC agencies and the ultimate acquisition of mature versions of these technologies from the commercial sector. This helps build the NII, addresses Federal technology transition goals, and allows the operational experiences of the FNC to influence future Federal research agendas.

RESOLUTION: The Federal Networking Council (FNC) agrees that the following language reflects our definition of the term “Internet.” “Internet” refers to the global information system that — (i) is logically linked together by a globally unique address space based on the Internet Protocol (IP) or its subsequent extensions/follow-ons; (ii) is able to support communications using the Transmission Control Protocol/Internet Protocol (TCP/IP) suite or its subsequent extensions/follow-ons, and/or other IP-compatible protocols; and (iii) provides, uses or makes accessible, either publicly or privately, high level services layered on the communications and related infrastructure described herein.[12]
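Clause (i) of the definition, a globally unique address space, can be explored with Python’s standard `ipaddress` module. Its `is_global` and `is_private` flags follow IANA’s special-purpose address registries, which mark ranges such as 192.168.0.0/16 (reserved for private networks by RFC 1918) as reusable inside many separate networks rather than globally unique. The `describe` function and the sample addresses are illustrative choices, not part of the FNC resolution.

```python
import ipaddress


def describe(addr: str) -> str:
    """Report an address's numeric value and whether it belongs to the
    globally unique (publicly routable) part of the IP address space."""
    ip = ipaddress.ip_address(addr)       # accepts IPv4 or IPv6 text
    if ip.is_global:
        scope = "globally unique (publicly routable)"
    elif ip.is_private:
        scope = "private-use or reserved (not globally unique)"
    else:
        scope = "special-purpose"
    return f"{ip} (IPv{ip.version}, {int(ip)} as an integer): {scope}"


print(describe("8.8.8.8"))
# 8.8.8.8 (IPv4, 134744072 as an integer): globally unique (publicly routable)
print(describe("192.168.1.10"))
```

Under the hood, an IPv4 address is just a 32-bit number; what makes the Internet “logically linked together” in the FNC’s sense is that registries coordinate who may use each globally routable number.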

As we’ve worked through this textbook, we’ve explored the ever-present social shaping of technology in recognition that technologies are not, and can never be, neutral. The systems for internetworking are both deeply technical and deeply social: they are sociotechnical artifacts and systems, designed, developed, and implemented within a range of political, economic, social, and cultural structures. The outcomes of this sociotechnical design and implementation will benefit some people but not others, and may harm some. Remember that tinkering is a research process, one that incorporates various aspects of the methodological landscape. Without incorporating critical paradigms through moral and ethical questioning that unmasks and exposes hidden forms of oppression, false beliefs, and commonly held myths, we continue to advance the giant triplets of racism, materialism, and militarism.

So let’s finish this chapter by exploring a limited-government, supply-side, market-oriented think tank that has also helped shape the sociotechnical Internet, influencing both government policy and technology innovation in ways very different from the Free-net and community networking movement model.

In 1993, the Progress & Freedom Foundation, a nonprofit think tank, was launched. The first paragraph of its mission statement notes:

The Progress & Freedom Foundation is a market-oriented think tank that studies the impact of the digital revolution and its implications for public policy. Its mission is to educate policymakers, opinion leaders, and the public about issues associated with technological change, based on a philosophy of limited government, free markets, and individual sovereignty.

In August 1994, the foundation released the publication “Cyberspace and the American Dream: A Magna Carta for the Knowledge Age.” In its preamble, authors Esther Dyson, George Gilder, George Keyworth, and Alvin Toffler begin by stating:

The central event of the 20th century is the overthrow of matter. In technology, economics, and the politics of nations, wealth in the form of physical resources has been losing value and significance. The powers of mind are everywhere ascendant over the brute force of things.

In a First Wave economy, land and farm labor are the main “factors of production.” In a Second Wave economy, the land remains valuable while the “labor” becomes massified around machines and larger industries. In a Third Wave economy, the central resource (a single word broadly encompassing data, information, images, symbols, culture, ideology, and values) is actionable knowledge.

The industrial age is not fully over. In fact, classic Second Wave sectors (oil, steel, auto production) have learned how to benefit from Third Wave technological breakthroughs just as the First Wave’s agricultural productivity benefited exponentially from the Second Wave’s farm mechanization.

But the Third Wave, and the Knowledge Age it has opened, will not deliver on its potential unless it adds social and political dominance to its accelerating technological and economic strength.[13]

Soon after this publication, a range of scholars began using the terms technolibertarianism and cyberlibertarianism to explore how both the political left and right within the United States were beginning to incorporate the central Third Wave concepts of a Knowledge Age introduced in the Magna Carta. In “Cyberlibertarian Myths and the Prospects for Community,” Langdon Winner highlights three key aspects of the Magna Carta:

- technological determinism — changes that are inevitable, irresistible, and world-transforming;

- radical individualism — ecstatic self-fulfillment and the putting aside of anything that might hinder the pursuit of rational self-interest;

- supply-side, free market capitalism as formulated by Milton Friedman.[14]

UK media theorists Richard Barbrook and Andy Cameron explore how two movements came together in a hybrid faith they termed the Californian Ideology: the US New Left, which formed as part of ’60s radicalism through collaborative campaigns against militarism, racism, sexual discrimination, homophobia, mindless consumerism, and pollution; and the US New Right, which resurrected an older form of economic liberalism based on the liberty of individuals within the marketplace. In “The Californian Ideology,” they go on to underline:

“Crucially, anti-statism provides the means to reconcile radical and reactionary ideas about technological progress. While the New Left resents the government for funding the military-industrial complex, the New Right attacks the state for interfering with the spontaneous dissemination of new technologies by market competition.”[15]

More recent work by David Golumbia indicates that a strong political voice, championed by both the political right and left, continues to proclaim the liberatory nature of mass computerization and of “digital practices whose underlying motivations are often explicitly libertarian.” For instance, Golumbia states:

“We must not mistake the ‘computer revolution’ for anything like a political revolution as various leftist traditions have understood it. The only way to achieve the political ends we pursue is to be absolutely clear about what those ends are. Putting the technological means for achieving them ahead of clear consideration of the ends is not merely putting the cart before the horse; it is trusting in a technological determinism that has never been and will never be conducive to the pursuit of true human freedom.”[16]

In considering the prospects for community given the broad adoption of cyberlibertarianism, Winner concluded by noting: “Superficially appealing uses of new technology become much more problematic when regarded as seeds of evolving, long term practices. Such practices, we know, eventually become parts of consequential social relationships. Those relationships eventually solidify as lasting institutions. And, of course, such institutions are what provide much of the actual framework for how we live together. That suggests that even the most seemingly inconsequential applications and uses of innovations in networked computing be scrutinized and judged in the light of what could be important moral and political consequences. In the broadest spectrum of awareness about these matters we need to ask: Are the practices, relationships and institutions affected by people’s involvement with networked computing ones we wish to foster? Or are they ones we must try to modify or even oppose?”[17]

The last half century has seen a rich exploration of various sociotechnical artifacts and systems for digital networking and internetworking. These tools have provided opportunities to address a range of individual and social issues and meet a range of individual and social needs. The commercialization of the Internet has further opened up amazing possibilities for community networking and for advancing stronger citizens through community conversations and information sharing; two-way interactions with education and government; support for small- and medium-sized businesses, agriculture, community media, makerspaces, and DIY and do-it-together culture; and many, many more means of advancing civic intelligence. But the social shaping of technology also includes a range of political, economic, and social influences that have problematically shaped these technologies and our visions for them.

As Eduardo Villanueva-Mansilla notes in Section 2.3, “The early mirage, and the current desert,” of his 2020 First Monday paper “ICT policies in Latin America: Long-term inequalities and the role of globalized policy-making”[18]:

Inequalities, both new and old, find a home on the Internet, and multiply happily. Commercial giants dominate the conversation and fail to pay their due. Public spheres become toxic and uncontrollable through the tools that exist on the Net[19]. Nonetheless, governments dedicate resources to promote access and discuss policy frames in order to provide an attractive environment to invest. This points to a divorce between the reality of the past 25 years of Internet development, and the imagination of its potential. How to deal with this divorce (or may we call it a divide?) requires an understanding of the ideological perspective behind most of the development/promotional efforts about the Internet. This is especially seen by those undertaken by the government themselves, as it has happened in Latin America and specially in Peru for the past decades.

But we do not stand at an “either/or” inflection point. Internetworking can take many shapes and forms, which regularly incorporate the Internet suite of protocols and applications. The key is the extent to which we approach an Internet-based tool from a ‘thing-oriented’ rather than a ‘person-oriented’ framing. A dominant narrative is centered within ‘thing-oriented’ framings such as cyberlibertarianism. But as we work to open new critical lenses that help us see the ‘person-centered’ counterstories, past and present, and enter into communities of practice across difference using collective leadership and community inquiry, we begin to dismantle the many webs of oppression within these sociotechnical systems while effectively using carefully selected aspects of them to achieve social justice outcome goals, as Judy Wajcman notes in this video segment on the impact of digital technology.

Lesson Plan

In this session we begin our journey exploring the Internet and other digital internets, which serve as the base of many of our Networked Information Systems today. The history of internetworking, the range of organizations that helped in its design, and the specific influence of the United States in shaping and codifying these designs are important to remember as we move forward in the Rainbow Unit. This mutual shaping, past and present, is both significantly solidified within the black boxes that are the smart devices we use daily in our smart homes and smart cities, and also highly fluid within our tinker, maker, and DIY innovation-in-use spaces.

Essential Resources:

The essential resources for this extended session chapter are many and varied.

First, skim these in connection with the related sections of this chapter, bringing a critical mindset to tease this sociotechnical review apart a bit further as it relates to the broader themes of this textbook. With this as an entry point, carefully choose two or three of these to delve further into the ways we are not confronting our ignorance of the various conflicting impacts based on the social shaping of technologies, but rather the ways our hidden intelligent practices have impacted other individuals and communities.

- Histories of open models for internetworking

- Russell, Andrew L., and Valérie Schafer. “In the Shadow of ARPANET and Internet: Louis Pouzin and the Cyclades Network in the 1970s.” Technology and Culture 55, no. 4 (December 3, 2014): 880–907. https://doi.org/10.1353/tech.2014.0096.

- Leiner, Barry M, Vinton G. Cerf, David D. Clark, Robert E Kahn, Leonard Kleinrock, Daniel C. Lynch, Jon Postel, Larry G. Roberts, and Stephen Wolff. “A Brief History of the Internet.” ACM SIGCOMM Computer Communication Review 39, no. 5 (October 2009): 22–31. https://doi.org/10.1145/1629607.1629613.

- Zakon, Robert H. “Hobbes’ Internet Timeline.” Accessed July 16, 2020. https://tools.ietf.org/html/rfc2235.

- Free-nets and Community Networking

- Grundner, Tom. “Seizing the Infosphere: An Alternative Vision for National Computer Networking.” Graduate School of Library and Information Science, University of Illinois at Urbana-Champaign, 1994. http://hdl.handle.net/2142/365.

- Day, Peter. “Community Networks.” Public Sphere Project. Accessed July 16, 2020. http://publicsphereproject.org/content/community-networks-1.

- Schuler, Douglas. “Community networks and the evolution of civic intelligence.” AI & Society 25, no. 3 (August 2010): 291–307. https://doi.org/10.1007/s00146-009-0260-z.

- Commercialization of the Internet

- Leiner, Barry M., Vinton G. Cerf, David D. Clark, Robert E. Kahn, Leonard Kleinrock, Daniel C. Lynch, Jon Postel, Larry G. Roberts, and Stephen Wolff. “A Brief History of the Internet.” ACM SIGCOMM Computer Communication Review 39, no. 5 (October 2009): 22–31. https://doi.org/10.1145/1629607.1629613.

- Mowery, David C., and Timothy Simcoe. “Is the Internet a US Invention?—An Economic and Technological History of Computer Networking.” Research Policy 31, no. 8 (December 1, 2002): 1369–87. https://doi.org/10.1016/S0048-7333(02)00069-0.

- The Magna Carta and Cyberlibertarianism

- Dyson, Esther, George Gilder, George Keyworth, and Alvin Toffler. “Cyberspace and the American Dream: A Magna Carta for the Knowledge Age.” The Progress & Freedom Foundation, August 1994. http://www.pff.org/issues-pubs/futureinsights/fi1.2magnacarta.html.

- More on the Progress and Freedom Foundation can be found on the foundation’s website, which remains as an archive. Consider especially their “About” page, which includes their mission statement and statement of who they are, and list of “Supporters” as of 2009.

- Winner, Langdon. “Cyberlibertarian Myths and the Prospects for Community.” ACM SIGCAS Computers and Society 27, no. 3 (September 1, 1997): 14–19. https://www.langdonwinner.com/other-writings/2018/1/15/cyberlibertarian-myths-and-the-prospects-for-community.

- Barbrook, R., and A. Cameron. “The Californian Ideology.” Science as Culture 6, no. 1 (January 1, 1996): 56. https://www.tandfonline.com/doi/abs/10.1080/09505439609526455.

- Golumbia, David. “Cyberlibertarians’ Digital Deletion of the Left.” Jacobin Magazine, December 4, 2013. https://www.jacobinmag.com/2013/12/cyberlibertarians-digital-deletion-of-the-left/.

Additional Resources:

- Look for one or two current articles related to applications of the Sociotechnical Internet today. For instance, in May 2020, The Intercept published Naomi Klein’s article “Screen New Deal: Under Cover of Mass Death, Andrew Cuomo Calls in the Billionaires to Build a High-Tech Dystopia.” This article is the first installment in an ongoing series about the shock doctrine and disaster capitalism in the age of Covid-19. It highlights a continuing belief among tech billionaires and many others in Silicon Valley that there is no problem technology cannot fix, and that this provides them a golden opportunity to gain the deference and power that, in their view, has been unjustly denied to them.

Key Technical Terms

- The frameworks and principles that are core to the Internet

- Basic components used to build a network, including:

- Node identification using IP and MAC addresses,

- Network Interface Controllers (NICs), such as wired Ethernet ports, wireless Ethernet cards, modems, or Optical Network Terminals (ONTs) for fiber optics devices.

- Media used to interconnect devices, including coaxial copper, twisted pair copper, and fiber optics cables, and radio wave transmissions

- The interconnect devices, such as a wireless Ethernet (Wi-Fi) access point, a wired Ethernet switch, and the router used to interconnect the different devices of each local network to create a larger network of networks

- The different levels of internetworking of multiple devices and of multiple networks of devices, including Personal (PAN), Local (LAN), Campus (CAN) and Metropolitan (MAN), and Wide Area Networks (WAN)

- Internet service provider technologies, including Digital Subscriber Line (DSL), cable, cellular, Satellite-Based Internet, and fiber optics

- The IP addressing and naming process and procedures, including the Domain Name System (DNS), the Dynamic Host Configuration Protocol (DHCP), Network Address Translation (NAT), and Internet Protocol versions 4 and 6

- Cloud computing and its relation to formal specifications, standards, protocols, and procedures

- Troubleshooting tools, including link lights, ping, traceroute, dig, ifconfig/ipconfig, and speedtest. NOTE: You are encouraged to explore the Back Matter chapter Network Troubleshooting for more details on these.

Professional Journal Reflections:

In the Blue Unit chapter 2A: The Methodological Landscape, we explored the assumptions that form the landscape of the three major research paradigms within Western science today. For those working within positivist paradigms, the purpose of research is to discover regularities and causal laws so that people can explain, predict, and control events and processes. For those working within interpretive paradigms, the purpose of research is to describe and understand phenomena in the social world and their meanings in context. And for those working within critical paradigms, the purpose of research is to empower people to change their conditions by unmasking and exposing hidden forms of oppression, false beliefs, and commonly held myths. We’ve also discovered that the deductive reasoning or logic used within positivist paradigms is essential for tasks such as writing programming code, which in turn shapes the development of machine learning and artificial intelligence. As we saw in the Blue Unit, though, writing instructions with the inductive reasoning common in education can introduce errors into instructions that themselves must follow deductive logic, as we saw when writing the first three notes of the middle-C scale in 2B: Make Music with Code.

Throughout the book, another core assumption is drawn from critical paradigms, which acknowledge that all research is a moral-political and value-based activity that requires researchers to explicitly declare and reflect on their value position(s) and to provide arguments for their normative reasoning. As Richard Milner notes, it is important within critical research for us to research the self, to research the self in relation to others, to take part in engaged reflection about what is happening in a particular research community, and to shift inquiry from self to system. I have found this to be as relevant within tinkering as research as it is within formal research in academia and the business sector.

Thus, the goal of this week’s Professional Journal Reflection is to bring reflections on the self into conversation with our inquiry into the Internet, past and present, in order to advance a more holistic understanding of networked information systems, one that is shaped by, and that shapes, the relation of self and others.

- Using a positivist paradigm lens, note several key technical takeaways from the chapters and essential resources from this session of the Rainbow Unit.

- Using an interpretivist paradigm lens, move from the simple nuts and bolts to note several evocative insights from this session of the Rainbow Unit.

- From a critical paradigm lens and acknowledgement of a non-neutral stance, what mutual shaping has influenced the development and use of the Internet and major sociotechnical artifacts that are parts of our personal and professional lives? With our professional engagement with others around us near and far?

- What first thoughts do you have on how we can work together to actively decodify key terms and concepts related to networked information systems to better understand ways in which the oppressive components within these can be countered moving forward?

- Merriam-Webster, “Networking,” accessed June 2, 2020, https://www.merriam-webster.com/dictionary/networking. Emphasis added. ↵

- This is not to be confused with the Bitnet of Things Gershenfeld references as the counterpoint to the Internet of Things which he has championed and which was discussed in chapter 1A of the Rainbow Unit. Learn more about historic BITNET. ↵

- Andrew L. Russell, and Valérie Schafer, “In the Shadow of ARPANET and Internet: Louis Pouzin and the Cyclades Network in the 1970s,” Technology and Culture 55, no. 4 (December 3, 2014): 880–907. https://doi.org/10.1353/tech.2014.0096. ↵

- Russell and Schafer, “In the Shadow of ARPANET and Internet,” 886. ↵

- The current list of Requests for Comments (RFCs) is available on their website. ↵

- Learn more about the launch of the Internet Society on their website. ↵

- Robert H. Zakon, “Hobbes’ Internet Timeline,” accessed July 16, 2020. https://tools.ietf.org/html/rfc2235. ↵

- Tom Grundner, “Seizing the Infosphere: An Alternative Vision for National Computer Networking,” Graduate School of Library and Information Science, University of Illinois at Urbana-Champaign, 1994. http://hdl.handle.net/2142/365. ↵

- Douglas Schuler, “Community networks and the evolution of civic intelligence,” AI & Society 25, no. 3 (August 2010). https://doi.org/10.1007/s00146-009-0260-z. ↵

- Gregory B. Newby and Ann P. Bishop, “Community System Users and Uses,” Proceedings of the ASIS Annual Meeting (1996): 1-2. https://web.archive.org/web/20220213055143/https://petascale.org/papers/asispnet.pdf. ↵

- David C. Mowery and Timothy Simcoe, “Is the Internet a US Invention?—An Economic and Technological History of Computer Networking,” Research Policy 31, no. 8 (December 1, 2002): 1386. https://doi.org/10.1016/S0048-7333(02)00069-0. ↵

- Federal Networking Council, “FNC Resolution: Definition of ‘Internet’,” October 24, 1995. https://www.nitrd.gov/historical/fnc/internet_res.pdf. ↵

- Esther Dyson, George Gilder, George Keyworth, and Alvin Toffler, “Cyberspace and the American Dream: A Magna Carta for the Knowledge Age,” The Progress & Freedom Foundation, August 1994. http://www.pff.org/issues-pubs/futureinsights/fi1.2magnacarta.html. ↵

- Langdon Winner, “Cyberlibertarian Myths and the Prospects for Community,” ACM SIGCAS Computers and Society 27, no. 3 (September 1, 1997): 14–19. https://www.langdonwinner.com/other-writings/2018/1/15/cyberlibertarian-myths-and-the-prospects-for-community. ↵

- R. Barbrook and A. Cameron, “The Californian Ideology,” Science as Culture 6, no. 1 (January 1, 1996): 56. https://www.tandfonline.com/doi/abs/10.1080/09505439609526455. ↵

- David Golumbia, “Cyberlibertarians’ Digital Deletion of the Left,” Jacobin Magazine, December 4, 2013. https://www.jacobinmag.com/2013/12/cyberlibertarians-digital-deletion-of-the-left/. ↵

- Winner, “Cyberlibertarian Myths and the Prospects for Community.” ↵

- E. Villanueva-Mansilla, “ICT policies in Latin America: Long-term inequalities and the role of globalized policy-making,” First Monday 25, no. 7 (2020). https://doi.org/10.5210/fm.v25i7.10865. ↵

- David Nemer, “The three types of WhatsApp users getting Brazil’s Jair Bolsonaro elected: WhatsApp has proved to be the ideal tool for mobilizing political support, and for spreading fake news,” Guardian, October 25, 2018. https://www.theguardian.com/world/2018/oct/25/brazil-president-jair-bolsonaro-whatsapp-fake-news. Accessed April 25, 2023. ↵

Peer-to-peer architecture: A computing structure in which individual devices can share information and resources directly without relying on a dedicated central server. Each device can perform a mix of resource provision (e.g., computation, storage, and networked sharing) and resource requesting (e.g., requesting a web page, new email, or social media posts) tasks. Compare to client-server architecture, in which the client device performs requests while a server device performs resource provision.

Store-and-forward: A telecommunications technique in which information sent toward a specified destination is received and stored in full at each intermediate node on the path, and then forwarded to the next node.
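The store-and-forward idea can be illustrated with a toy simulation in Python. This is a minimal sketch under simplifying assumptions: the node names and the `store_and_forward` helper are hypothetical, each node holds the complete message in its own buffer before passing it on, and real concerns such as timing, queuing delay, and transmission errors are ignored.

```python
from collections import deque


def store_and_forward(message: bytes, path: list[str]) -> tuple[bytes, list[str]]:
    """Move a message hop by hop along `path` (hypothetical node names).

    Each node first stores the complete message in its own buffer and only
    then forwards it to the next node on the path. Returns the message as
    delivered to the last node and a log of every hop.
    """
    if len(path) < 2:
        raise ValueError("a path needs at least a sender and a destination")
    buffers = {node: deque() for node in path}   # one storage queue per node
    log = []
    buffers[path[0]].append(message)             # the sender starts with the message
    for here, nxt in zip(path, path[1:]):
        stored = buffers[here].popleft()         # take the stored message out
        buffers[nxt].append(stored)              # the next node stores it in full
        log.append(f"{here} -> {nxt}: {len(stored)} bytes stored at {nxt}")
    delivered = buffers[path[-1]].popleft()      # the destination reads the message
    return delivered, log


if __name__ == "__main__":
    data, steps = store_and_forward(b"hello", ["laptop", "router-A", "router-B", "server"])
    for step in steps:
        print(step)
```

In a real network the "buffer" is a router's packet memory, and because each unit must arrive completely before it is sent on, every store-and-forward hop adds some delay.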

End-to-end principle: According to this principle, network features should be implemented as close to the end nodes of the network as possible. Everything that can be done within the client or server application should be done there; only those functions that the interconnecting devices must perform should be done within the network.
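A common illustration of the end-to-end principle is an integrity check that lives only at the endpoints. In this minimal Python sketch, the `send`, `relay`, and `receive` helpers are hypothetical: the sending application attaches a SHA-256 digest, intermediate nodes merely pass bits along, and the receiving application verifies the digest itself rather than trusting each hop. The simulated bit flip is an assumption added for demonstration.

```python
import hashlib


def send(data: bytes) -> tuple[bytes, str]:
    """Sending endpoint: attach a digest of the data it means to deliver."""
    return data, hashlib.sha256(data).hexdigest()


def relay(packet: tuple[bytes, str], corrupt: bool = False) -> tuple[bytes, str]:
    """Intermediate node: pass the packet along without checking it."""
    data, digest = packet
    if corrupt and data:
        # Flip the lowest bit of the last byte to simulate damage in transit.
        data = data[:-1] + bytes([data[-1] ^ 0x01])
    return data, digest


def receive(packet: tuple[bytes, str]) -> bool:
    """Receiving endpoint: verify integrity itself, end to end."""
    data, digest = packet
    return hashlib.sha256(data).hexdigest() == digest


if __name__ == "__main__":
    print(receive(relay(relay(send(b"hello")))))                # True
    print(receive(relay(relay(send(b"hello")), corrupt=True)))  # False
```

The design choice mirrors the principle: the relays stay simple, and only the endpoints, which know what "correct" means for the application, do the checking.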

Internet Protocol (IP): A key communications protocol for routing data across networks, thus enabling the Internet. This protocol delivers packets from a sender to a destination and requires IP addresses for routing these packets. Domain Name System (DNS) services are used to associate domain names with specific IP addresses.
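To make IP addressing concrete, this Python sketch uses the standard library's `ipaddress` module to decide whether a host can deliver a packet directly or must hand it to its router. The `next_hop` helper is hypothetical, the addresses come from private and documentation ranges, and the logic is a simplified version of a host's routing decision rather than a full routing table.

```python
import ipaddress


def next_hop(host_interface: str, destination: str, gateway: str) -> str:
    """Return where a host should send a packet bound for `destination`.

    `host_interface` is the host's own address plus prefix length, for
    example "192.168.1.20/24". Destinations on the host's own network are
    reached directly; anything else goes to the default gateway (router).
    """
    iface = ipaddress.ip_interface(host_interface)
    if ipaddress.ip_address(destination) in iface.network:
        return destination
    return gateway


if __name__ == "__main__":
    me = "192.168.1.20/24"
    print(next_hop(me, "192.168.1.55", "192.168.1.1"))      # same LAN: 192.168.1.55
    print(next_hop(me, "203.0.113.7", "192.168.1.1"))       # elsewhere: 192.168.1.1
    print(ipaddress.ip_address("192.168.1.20").is_private)  # True
    print(ipaddress.ip_address("2001:db8::1").version)      # 6
```

Private addresses such as 192.168.1.20 are not routed on the public Internet, which is one reason home routers commonly use Network Address Translation (NAT) to share a single public address.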

RFCs are technical and organizational notes about the Internet and cover many aspects of computer networking, including protocols, procedures, programs, and concepts.

Hypertext Transfer Protocol (HTTP): The ever-present application layer protocol used to request and access media (such as webpages) from across the Internet. Website URLs begin with “http” or “https” to signify this protocol (the added ‘s’ denotes HTTP Secure, which encrypts the connection and is increasingly common). HTTP is part of the Internet protocol suite.
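The text format of an HTTP/1.1 exchange can be shown with two small Python helpers. This is a minimal sketch: `build_get_request` and `parse_status_line` are hypothetical names, `example.com` is a domain reserved for documentation, and nothing is sent over the network. It assumes the status line carries a reason phrase, which servers may omit.

```python
def build_get_request(host: str, path: str = "/") -> bytes:
    """Build a minimal HTTP/1.1 GET request as raw bytes."""
    head = [
        f"GET {path} HTTP/1.1",   # request line: method, path, protocol version
        f"Host: {host}",          # HTTP/1.1 requires a Host header
        "Connection: close",      # ask the server to close after responding
    ]
    # Each line ends in CRLF, and an empty line (a second CRLF) ends the headers.
    return ("\r\n".join(head) + "\r\n\r\n").encode("ascii")


def parse_status_line(response: bytes) -> tuple[str, int, str]:
    """Split the first line of a response, such as b"HTTP/1.1 200 OK"."""
    status_line = response.split(b"\r\n", 1)[0].decode("ascii")
    version, code, reason = status_line.split(" ", 2)
    return version, int(code), reason


if __name__ == "__main__":
    print(build_get_request("example.com"))
    print(parse_status_line(b"HTTP/1.1 404 Not Found\r\n\r\n"))  # ('HTTP/1.1', 404, 'Not Found')
```

A browser would send these bytes over a TCP connection to port 80 of the web server; HTTPS carries the same kind of messages inside an encrypted TLS connection, usually on port 443. Newer versions (HTTP/2 and HTTP/3) keep the same meaning but use a binary framing instead of this plain-text layout.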

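As part of the Internet protocol suite, SMTP is a communications protocol for email servers and clients. SMTP is used to send email and to relay it between mail servers; email clients typically retrieve their messages using other protocols, such as IMAP or POP3.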

The principle that networks begin and end with local area networks (LANs). The other types of area networks, including the wide area networks that comprise the Internet, then serve as a bridge between the local area networks.

Client-server architecture: A computing structure that separates the work between a resource provider, or server, and a resource requester, or client. The client-server model is distinct from centralized computing, in which servers reside in a central location or a set of regional locations. Admittedly, when many client programs initiate requests simultaneously, the server program(s) providing resources and services reside on centralized computer hardware, also called a server, that is specifically designed for computation, storage, and networked sharing. That is, the hardware called a server is just a computer specially designated to run one or more applications that provide the server side of the client-server protocol.
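The client-server split can be seen in a small Python program that runs both roles on one machine over the loopback address. This is a minimal sketch: `serve_once`, `client_request`, and `demo` are hypothetical names, the "service" simply echoes the request back, and the server handles a single client before stopping.

```python
import socket
import threading


def serve_once(server: socket.socket) -> None:
    """Server side: accept one client, read its whole request, send a reply."""
    conn, _addr = server.accept()
    with conn:
        chunks = []
        while chunk := conn.recv(1024):    # read until the client stops sending
            chunks.append(chunk)
        conn.sendall(b"echo: " + b"".join(chunks))


def client_request(port: int, message: bytes) -> bytes:
    """Client side: connect, send a request, and read the full response."""
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(message)
        client.shutdown(socket.SHUT_WR)    # signal that the request is complete
        chunks = []
        while chunk := client.recv(1024):  # read until the server closes
            chunks.append(chunk)
        return b"".join(chunks)


def demo(message: bytes = b"hello") -> bytes:
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))      # port 0 lets the OS choose a free port
        server.listen(1)
        port = server.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(server,))
        worker.start()
        reply = client_request(port, message)
        worker.join()
    return reply


if __name__ == "__main__":
    print(demo(b"hello"))   # b'echo: hello'
```

Nothing about the server code requires special hardware: as the definition notes, a "server" is simply a program, and the machine that runs it takes on the name.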

Media access control (MAC) addresses are unique identifiers assigned to network interface controllers (NICs) as part of the data link layer of the OSI model.
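The structure of a MAC address can be inspected with a short Python sketch. It assumes the common 48-bit format written as six hexadecimal octets; the first three octets form the organizationally unique identifier (OUI) assigned to a manufacturer, and two bits in the first octet mark multicast and locally administered addresses. Locally administered addresses, such as the randomized MACs many phones now use, are not guaranteed to be globally unique. The `describe_mac` helper is hypothetical.

```python
def describe_mac(mac: str) -> dict:
    """Break a 48-bit MAC address such as "00:1A:2B:3C:4D:5E" into its parts."""
    octets = bytes(int(part, 16) for part in mac.replace("-", ":").split(":"))
    if len(octets) != 6:
        raise ValueError("expected six octets")
    first = octets[0]
    return {
        "oui": octets[:3].hex(":"),                   # manufacturer prefix
        "multicast": bool(first & 0x01),              # individual/group bit
        "locally_administered": bool(first & 0x02),   # universal/local bit
    }


if __name__ == "__main__":
    print(describe_mac("00:1A:2B:3C:4D:5E"))
    print(describe_mac("02-00-00-00-00-01"))
```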

Network interface controller (NIC): The hardware necessary for a node to connect to a network. For example, an Ethernet card (wired or wireless) is used for a LAN connection, a modem (cable, DSL, or dialup) is used for traditional Internet access, and an optical network terminal (ONT) is used for fiber to the home.

An Ethernet port is an Institute of Electrical and Electronics Engineers (IEEE) standard connector for 100BASE-TX/1000BASE-T full-duplex Fast and Gigabit wired Ethernet communications, using twisted pair CAT-5 and CAT-6 copper cabling.

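Modem: A portmanteau of the words modulator and demodulator. A modem modulates the digital zeros and ones provided by computing devices onto an analog carrier signal so that they can be transmitted through a network medium such as a phone or cable line, and demodulates incoming signals back into digital data.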

Media are used to interconnect devices on a network and take four primary forms: coaxial copper cable, twisted pair copper cable, fiber optics cable, and radio waves.

Interconnect device: A device used to connect nodes together. A switch or hub is used with wired Ethernet, an access point is used for wireless Ethernet (Wi-Fi), and a router or gateway builds an internet by connecting different LANs together.

Electrical switches are used to control the flow of current in a circuit. They can be set to the closed position, in which current flows through the circuit, or to the open position, in which current is not passed through. A classic example of this type is a light switch: closing the switch completes the circuit and turns on the light, while opening it turns the light off. Other switches are used to control the amount of current that flows across the circuit. A classic example of this type is a dimmer switch, used to raise or lower the brightness of a light.

While the above are common mechanical switches, it is also possible to use programming code to switch the flow of current along a circuit. A common method for doing this is to use a transistor, in which a small current, supplied under program control to one leg of the transistor, controls the flow of a larger current through the transistor’s other two legs.

A router is an interconnect device used to transfer data from one local area network (LAN) to another LAN connected to the router.

Personal area networks (PANs) provide a simple computer network organized around a few personal devices, allowing the transfer of files, photos, and music without the use of the Internet or your home's local network. Two common examples would be your Bluetooth headset or your keyboard and mouse. Depending on the Bluetooth range selected (or chosen for you), this could span roughly 1 meter (about 3 feet), 10 meters (about 33 feet), or 100 meters (about 330 feet). Beyond Bluetooth, other common PAN connectivity includes Infrared (IR), USB, ZigBee, Wi-Fi, and radio frequency (RF, including short-distance AM and FM radio).

The simplest type of Internet-based area network is a local area network (LAN). A LAN is a network of connected devices within a close geographical range. It is generally owned, managed, and used by people in a building. For example, connecting to a Wi-Fi network at a coffee shop or library would mean that your device would be a node on the coffee shop or library's publicly accessible LAN. Many businesses and institutions have a second, private LAN for use by staff only.

Metropolitan area network (MAN): A collection of LANs and devices in an area the size of a city.

Wide area network (WAN): Covers an area the size of a state or country, and could even be considered to include the entire Internet. A WAN comprises many interconnected MANs and LANs. The WAN used to internetwork these is typically owned and managed by one or more Internet service providers (ISPs: the businesses that provide connections to each LAN), network service providers (NSPs: the businesses that provide connections between ISPs), and backbone providers (the businesses that provide the more extended connections between NSPs).

Digital subscriber line (DSL): Adds two channels to a standard phone line for Internet access. Hub and spoke (dedicated line) topology; full duplex. In the U.S., DSL prioritizes download speeds.

Cable Internet: Redirects a cable channel to be used for Internet access. Subscribers in a neighborhood share a bus topology. In the U.S., cable Internet prioritizes download speeds.

Cellular Internet: 3G adds the EV-DO (Verizon, Sprint/Nextel) or HSDPA (AT&T, T-Mobile) protocol to the cellular voice protocol. 4G adds the WiMAX (Sprint) or LTE (Verizon, AT&T) standard to the cellular voice protocol. Equivalent to a bus (shared) topology. Prioritizes download speeds.

Fiber optic Internet: Ultra-high-speed communications technology with one or more channels for Internet access. Hub and spoke (dedicated) topology with symmetrical (equal) upload and download speeds.

Domain Name System (DNS): A naming system that translates domain names to IP addresses. This ensures a consistent name space for information resources.
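Name resolution can be tried from Python by asking the operating system's resolver, which, depending on system configuration, usually consults the local hosts file and then DNS servers. This minimal sketch uses the standard `socket.getaddrinfo` call; the `resolve` helper is hypothetical, and looking up names other than `localhost` requires a working network connection.

```python
import socket


def resolve(name: str) -> set[str]:
    """Ask the operating system's resolver for the IP addresses of `name`."""
    results = socket.getaddrinfo(name, None)
    # Each result is (family, type, proto, canonname, sockaddr);
    # the IP address is the first element of sockaddr.
    return {info[4][0] for info in results}


if __name__ == "__main__":
    print(resolve("localhost"))   # typically loopback addresses such as 127.0.0.1 and ::1
```

The same lookup is what the `dig` troubleshooting tool performs, except that `dig` queries DNS servers directly and shows the full answer records.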

As part of the Internet protocol suite, DHCP is a network management protocol. DHCP servers dynamically or statically assign IP addresses to connected nodes on the local area network (LAN) so that they can communicate with other IP networks.
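The address bookkeeping a DHCP server performs can be sketched in Python as a toy pool keyed by each client's MAC address. This is a hypothetical `LeasePool` class, not the DHCP protocol itself: it skips the discover, offer, request, and acknowledge message exchange, lease expiry timers, and the extra settings (such as the default gateway and DNS servers) that real DHCP hands out.

```python
import ipaddress


class LeasePool:
    """Toy DHCP-style address pool (hypothetical; no network messages)."""

    def __init__(self, network: str, reserved: int = 1) -> None:
        hosts = list(ipaddress.ip_network(network).hosts())
        self.free = hosts[reserved:]   # keep the first address(es) for the router
        self.leases = {}               # client MAC address -> leased IP address

    def request(self, mac: str):
        """Lease an address to `mac`, reusing its existing lease if it has one."""
        if mac in self.leases:
            return self.leases[mac]
        if not self.free:
            raise RuntimeError("address pool exhausted")
        address = self.free.pop(0)
        self.leases[mac] = address
        return address

    def release(self, mac: str) -> None:
        """Return a client's address to the pool."""
        self.free.append(self.leases.pop(mac))


if __name__ == "__main__":
    pool = LeasePool("192.168.1.0/29")          # usable hosts .1 through .6; .1 reserved
    print(pool.request("aa:bb:cc:00:00:01"))    # 192.168.1.2
    print(pool.request("aa:bb:cc:00:00:02"))    # 192.168.1.3
    print(pool.request("aa:bb:cc:00:00:01"))    # 192.168.1.2 again
```

Keying leases by MAC address is what lets a returning device receive the same address; a "static" assignment is simply a lease that the administrator fixes in advance.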

Cloud computing is a form of client-server architecture, where a distributed network of data centers ensures the regular availability of computer storage and power, which is accessed over the Internet.

Often called "specs," specifications are formalized practices created using accredited technical standards developed and adopted using an open consensus process under guidelines of a standards body, or using de facto technical standards developed and owned by a single group or company.

Link lights: LED lights found on wired Ethernet cards and switches. When lit, the lights indicate that the card and switch are powered on and working properly and that the Ethernet cable is plugged in and working properly.

Ping: Run from the command line or terminal application to test whether you can communicate with another node.

Traceroute: Run from the command line or terminal application to list each router along the path between two nodes and to test the performance (round-trip time) of each.